Probabilistic Graphical Models with message propagation are a powerful paradigm for building robust models of real phenomena.

Basic methods

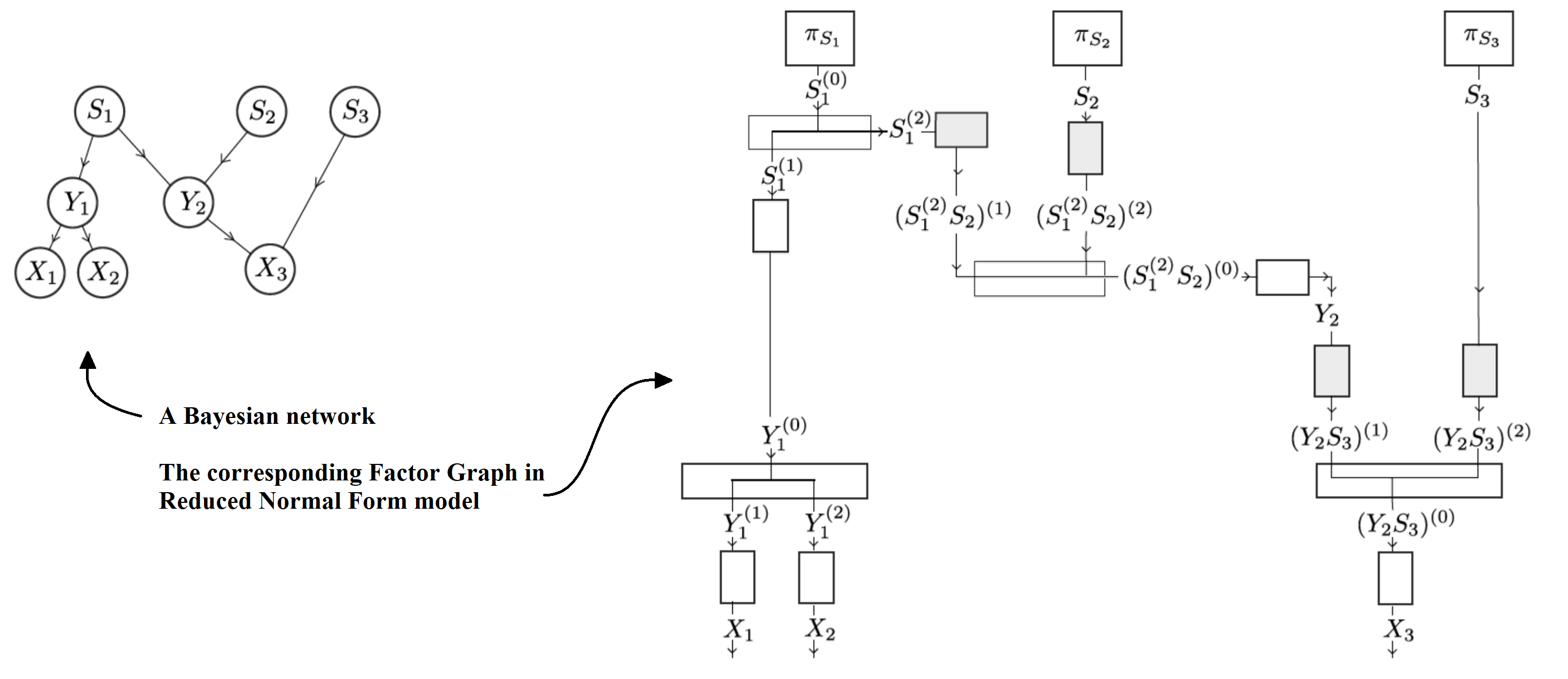

A Bayesian directed graph represents our knowledge about the dependencies among a set of random variables. Nodes represent the variables and arcs represent the conditional probability functions. A Bayesian graph can be transformed into a Factor Graph (FG), in which branches represent the variables and blocks represent the probability functions. The FG can in turn be transformed into an interconnection of only SISO (Single Input Single Output) blocks and diverters: the FG in Reduced Normal Form (FG-Rn). Such architectures are very flexible for handling “probability message propagation”: the same graph can be used for inference, generation and correction, with probabilities that travel bidirectionally through the system. The basic sum-product operations are rigorous translations of Bayes’ theorem and marginalization.
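As an illustration of how the sum-product rules reduce to Bayes’ theorem and marginalization, the following is a minimal Python sketch of the two building blocks for discrete variables. It is not the code of the publications listed below: the function names (`siso_forward`, `siso_backward`, `diverter`, `posterior`) and the numerical values are assumptions made only for this example, and a SISO block is assumed to store a row-stochastic matrix `P[x][y] = P(y|x)`.

```python
def normalize(v):
    """Scale a non-negative message so that it sums to one."""
    total = sum(v)
    if total == 0:
        raise ValueError("message has zero total mass")
    return [x / total for x in v]

def siso_forward(P, f_x):
    """Forward message: f_Y(y) proportional to sum_x f_X(x) P(y|x) (marginalization)."""
    return normalize([sum(f_x[i] * P[i][j] for i in range(len(P)))
                      for j in range(len(P[0]))])

def siso_backward(P, b_y):
    """Backward message: b_X(x) proportional to sum_y P(y|x) b_Y(y)."""
    return normalize([sum(p * b for p, b in zip(row, b_y)) for row in P])

def diverter(incoming):
    """For each branch, send the normalized product of the messages
    arriving on all the other branches."""
    outgoing = []
    for k in range(len(incoming)):
        prod = [1.0] * len(incoming[0])
        for m, msg in enumerate(incoming):
            if m != k:
                prod = [p * q for p, q in zip(prod, msg)]
        outgoing.append(normalize(prod))
    return outgoing

def posterior(f, b):
    """Posterior on a branch: normalized product of forward and backward messages."""
    return normalize([x * y for x, y in zip(f, b)])

# Hypothetical two-state example: prior on X, block P(Y|X), evidence Y = 1.
P = [[0.9, 0.1],
     [0.2, 0.8]]
f_x = [0.5, 0.5]          # forward message (prior) on X
b_y = [0.0, 1.0]          # backward message on Y: delta on the observed value

f_y = siso_forward(P, f_x)      # predictive distribution of Y
b_x = siso_backward(P, b_y)     # likelihood of the evidence as a function of X
post_x = posterior(f_x, b_x)    # Bayes' theorem: P(X | Y = 1)
```

With a uniform prior the posterior on X coincides with the normalized backward message, which is exactly what Bayes’ theorem prescribes for this two-variable case.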

- G. Di Gennaro, A. Buonanno, F.A.N. Palmieri, "Optimized Realization of Bayesian Networks in Reduced Normal Form using Latent Variable Model," in Soft Computing, Springer, pp. 1-12, 17 Mar. 2021. DOI: 10.1007/s00500-021-05642-3 [Preliminary Version on arXiv:1901.06201] - Link to GitHub Repository

- G. Di Gennaro, A. Buonanno, F.A.N. Palmieri, "Computational Optimization for Normal Form Realization Of Bayesian Model," Proceedings of 2018 workshop on Machine Learning for Signal Processing (MLSP2018), Aalborg, Denmark, 17-20 Sept. 2018. DOI: 10.1109/MLSP.2018.8517090

- F. A.N. Palmieri, "A Comparison of Algorithms for Learning Hidden Variables in Bayesian Factor Graphs in Reduced Normal Form," in IEEE Transactions on Neural Networks and Learning Systems, vol. 27, no. 11, pp. 2242-2255, Nov. 2016. DOI: 10.1109/TNNLS.2015.2477379

- Buonanno A., Palmieri F.A.N., "Simulink Implementation of Belief Propagation in Normal Factor Graphs". In: Bassis S., Esposito A., Morabito F. (eds) Advances in Neural Networks: Computational and Theoretical Issues. Smart Innovation, Systems and Technologies, vol 37. Springer, Cham (WIRN 2014); DOI: https://doi.org/10.1007/978-3-319-18164-6_2; Publisher Name: Springer, Cham; Print ISBN: 978-3-319-18163-9; Online ISBN: 978-3-319-18164-6. - Link to GitHub Repository

- F. A. N. Palmieri, "A Comparison of Algorithms for Learning Hidden Variables in Normal Graphs," submitted for journal publication, available on-line at arXiv: 1308.5576v1 [stat.ML] 26 Aug 2013.

- F. Palmieri, "Notes on Cutset Conditioning on Factor Graphs with Cycles," in IOS Press in the KBIES book series, Neural Nets WIRN09 - Proceedings of the 19th Italian Workshop on Neural Nets, Vietri sul Mare, Salerno, Italy, May 28–30 2009, Edited by Bruno Apolloni, Simone Bassis, Carlo F. Morabito, ISBN 978-1-60750-072-8.

- F. Palmieri, D. Mattera, P. Salvo Rossi, G. Romano, "La Costruzione di Memorie Associative su Grafi Fattoriali," in Modelli, sistemi e applicazioni di Vita Artificiale e Computazione Evolutiva, WIVACE 2009, a cura di Orazio Miglino, Michela Ponticorvo, Angelo Rega, Franco Rubinacci, Atti del VI Workshop Italiano di Vita Artificiale e Computazione Evolutiva. Napoli 23-25 Novembre 2009, Fridericiana Editrice Universitaria, ISBN: 978-88-8338-091-4.

- F. Palmieri, "Notes on Factor Graphs," in New Directions in Neural Networks with IOS Press in the KBIES book series, Proceedings of WIRN 2008, Vietri sul mare, June 2008; ISBN 978-1-58603-984-4.

- Francesco A. N. Palmieri, "Learning Non-Linear Functions with Factor Graphs," in IEEE Transactions on Signal Processing, Vol.61, N. 17, pp. 4360 - 4371, 2013, T-SP-14461-2012.R2; DOI: 10.1109/TSP.2013.2270463

- Francesco A. N. Palmieri and Alberto Cavallo, "Probability Learning and Soft Quantization in Bayesian Factor Graphs," in Neural Nets and Surroundings, Proc. of 22nd Italian Workshop on Neural Nets, WIRN 2012, May 17-19, Vietri sul Mare, Salerno, Italy, pp 3-10; DOI: 10.1007/978-3-642-35467-0_1; ISBN: 978-3-642-35466-3; Springer; Series: Smart Innovation, Systems and Technologies, Vol. 19, Apolloni, B.; Bassis, S.; Esposito, A.; Morabito, F.C. (Eds.);

- F. A. N. Palmieri, D. Ciuonzo, D. Mattera, G. Romano, P. Salvo Rossi, "From Examples to Bayesian Inference," Proceedings of the 21st Workshop on Neural Networks, Vietri sul Mare, Salerno, Italy, June 3-5, 2011, IOS Press; ISBN: 978-1-60750-971-4.

- F. Palmieri, G. Romano, P. Salvo Rossi, D. Mattera, "Building a Bayesian Factor Tree From Examples," Proceedings of the 2nd International Workshop on Cognitive Information Processing, 14-16 June, 2010 Elba Island (Tuscany) - Italy; 2150-4938; ISBN: 978-1-4244-6457-9; DOI: 10.1109/CIP.2010.5604232.

Context Analysis

Bayesian networks can also be learned from examples. We have contributed both basic theory and applications to this very promising paradigm for the design of artificial intelligence systems.

- A. Buonanno, A. Nogarotto, G. Cacace, G. Di Gennaro, F.A.N. Palmieri, M. Valenti and G. Graditi, "Bayesian Feature Fusion using Factor Graph in Reduced Normal Form," in Applied Sciences, MDPI, vol. 11, 1934, 22 Feb. 2021. DOI: 10.3390/app11041934

- A. Buonanno, P. Iadicicco, G. Di Gennaro, F.A.N. Palmieri, "Context Analysis Using a Bayesian Normal Graph," in Neural Advances in Processing Nonlinear Dynamic Signals (Smart Innovation, Systems and Technologies 102), A. Esposito, M. Faundez-Zanuy, F.C. Morabito and E. Pasero, Eds., Springer, 2018, pp. 85–96. DOI: 10.1007/978-3-319-95098-3_8 - Also presented at the 27th Workshop on Neural Networks (WIRN), Vietri sul Mare, SA, June 14-16, 2017.

- A. Buonanno, L. di Grazia and F. A. N. Palmieri, "Bayesian Clustering on Images with Factor Graphs in Reduced Normal Form," in S. Bassis, A. Esposito, F.C. Morabito, and E. Pasero, Advances in Neural Networks, (WIRN 2015), vol. 54, pp. 57-65, ISBN: 978-331933746-3; DOI: 10.1007/978-3-319-33747-0_6; Springer Science 2016.

Discrete Independent Component Analysis (DICA)

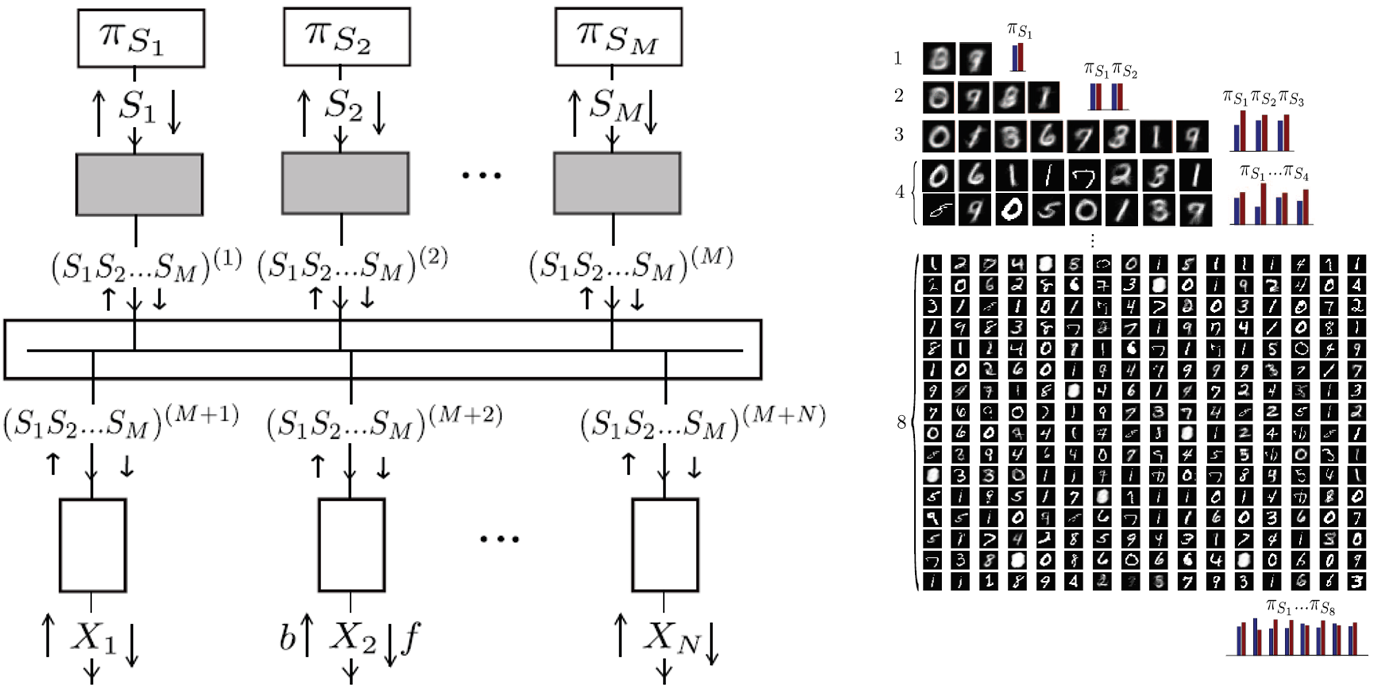

Using this paradigm, several inference tasks (classification, pattern completion, pattern correction) can be performed in a unified framework: evidence is injected into the network at any arbitrary point, the messages are left to propagate, and the results of the propagation are collected.
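The inject-propagate-collect procedure can be sketched on a toy network. The Python example below is only an illustration under stated assumptions, not the DICA algorithm of the paper listed below: it assumes a single hidden variable S connected through a diverter to three SISO blocks, each holding a hypothetical matrix `P[s][y] = P(y|s)`, and the helper names (`backward`, `forward`, `propagate`) are invented for this sketch. Observed variables inject a delta message, unobserved ones a uniform message.

```python
def normalize(v):
    total = sum(v)
    return [x / total for x in v]

def backward(P, b_y):
    """Message from an observed/unobserved variable back to S:
    b_S(s) proportional to sum_y P(y|s) b_Y(y)."""
    return normalize([sum(p * b for p, b in zip(row, b_y)) for row in P])

def forward(P, f_s):
    """Message from S towards a visible variable:
    f_Y(y) proportional to sum_s f_S(s) P(y|s)."""
    return normalize([sum(f_s[s] * P[s][y] for s in range(len(P)))
                      for y in range(len(P[0]))])

def propagate(prior, blocks, evidence):
    """Inject evidence, propagate once through the diverter, collect results.

    Returns the posterior on S (classification) and, for every visible
    variable, the forward message built from all the *other* branches
    (pattern completion or correction)."""
    back = [backward(P, b) for P, b in zip(blocks, evidence)]
    post = list(prior)
    for msg in back:
        post = [a * b for a, b in zip(post, msg)]
    post = normalize(post)
    outputs = []
    for k, P in enumerate(blocks):
        to_branch = list(prior)
        for j, msg in enumerate(back):
            if j != k:  # a branch never receives its own evidence back
                to_branch = [a * b for a, b in zip(to_branch, msg)]
        outputs.append(forward(P, normalize(to_branch)))
    return post, outputs

# Hypothetical setup: binary S, three binary visible variables.
P = [[0.9, 0.1],
     [0.1, 0.9]]
blocks = [P, P, P]
prior = [0.5, 0.5]
evidence = [[1.0, 0.0],   # Y1 observed = 0
            [1.0, 0.0],   # Y2 observed = 0
            [0.5, 0.5]]   # Y3 unobserved: uniform message

post_s, completed = propagate(prior, blocks, evidence)
```

Here `post_s` plays the role of a classification result, while `completed[2]` is the predicted distribution of the missing variable. Because each forward message excludes the evidence of its own branch, the same output can also be compared with an observed value to flag and correct an unlikely entry.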

- F. A. N. Palmieri and A. Buonanno, "Discrete independent component analysis (DICA) with belief propagation," 2015 IEEE 25th International Workshop on Machine Learning for Signal Processing (MLSP), Boston, MA, Sept. 17-20, 2015, pp. 1-6; doi: 10.1109/MLSP.2015.7324364. Also available on ArXiv http://arxiv.org/abs/1505.06814 since May 2015.

Multi-Layer Bayesian Networks

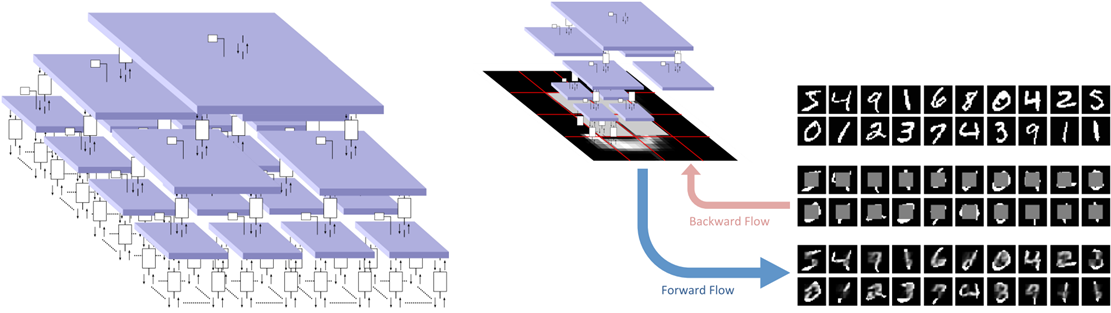

We have applied Latent Variable Models (LVMs) to a large number of applications, such as clustering of images, scene understanding, discrete independent component analysis and text correction. Moreover, the LVM can be seen as a basic building block for Deep Learning architectures.

- A. Buonanno and F. A. N. Palmieri, "Two-dimensional multi-layer Factor Graphs in Reduced Normal Form," 2015 International Joint Conference on Neural Networks (IJCNN), Killarney, 2015, pp. 1-6; doi: 10.1109/IJCNN.2015.7280415

- A. Buonanno, F. A. N. Palmieri, "Towards Building Deep Networks with Bayesian Factor Graphs," submitted for journal publication, Feb 2015, available at arXiv:1502.04492 [cs.CV]

- F. A. N. Palmieri and A. Buonanno, "Belief Propagation and Learning in Convolution Multi-layer Factor Graphs," Proceedings of 4th International Workshop on Cognitive Information Processing, CIP2014, May 26-28, 2014, Copenhagen, Denmark. DOI: 10.1109/CIP.2014.6844500.

Simulink Library

Each probabilistic architecture requires custom belief propagation code to compute the marginal and/or posterior probabilities of the stochastic variables of interest.

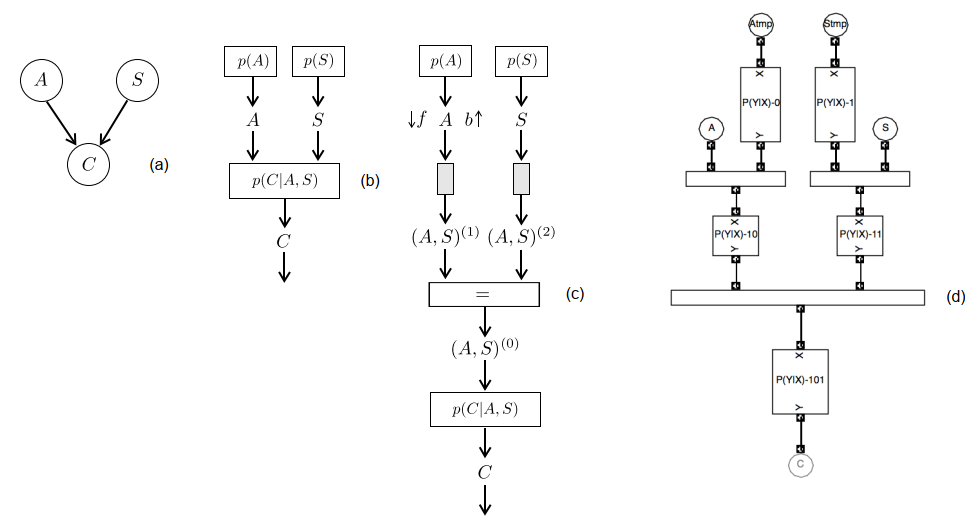

Since modularity (combining simpler parts to build a complex system) is a fundamental characteristic of graphical models, we have built the Factor Graph in Reduced Normal Form Simulink Library. This library is a rapid prototyping environment for testing a wide range of architectures simply by dragging and connecting building blocks (i.e., Variable blocks, Source blocks, SISO blocks, Replicator blocks).

The following figure shows the Asbestos-Cancer-Smoking example: (a) Bayesian Graph representation; (b) Factor Graph in Normal Form representation; (c) Factor Graph in Reduced Normal Form representation; (d) network designed using the Simulink Library.

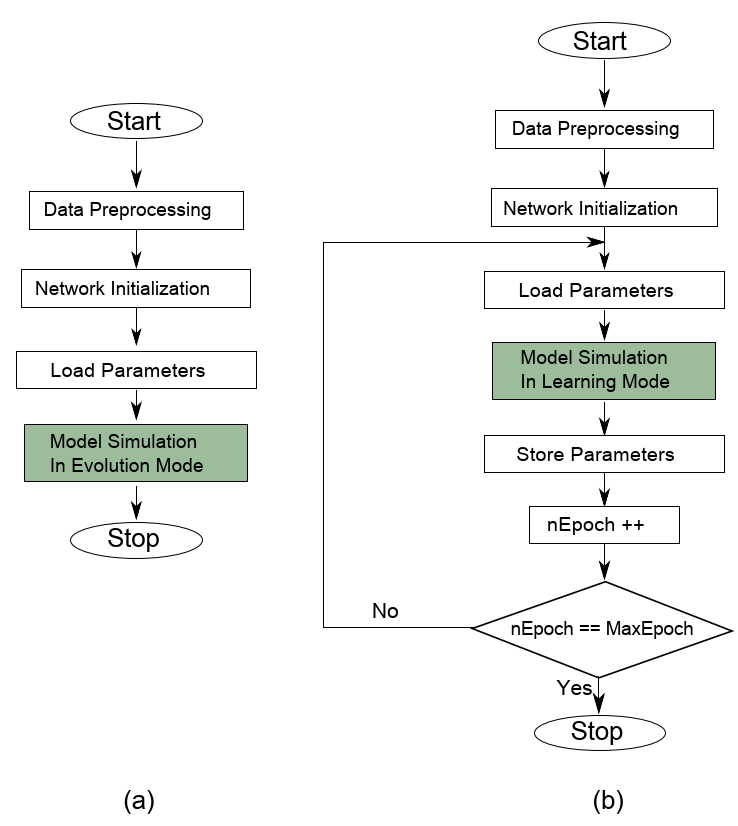
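For readers without Simulink, the kind of quantity such a network computes can be checked by brute-force enumeration. The Python sketch below is not the library's message-passing implementation. It assumes the common form of this example in which Asbestos (A) and Smoking (S) are independent binary causes of Cancer (C), and every probability value in it is made up for illustration.

```python
# Hypothetical priors: index 0 = absent, 1 = present.
p_a = [0.9, 0.1]                      # P(A)
p_s = [0.7, 0.3]                      # P(S)
# Hypothetical conditional table P(C = 1 | A, S).
p_c1 = {(0, 0): 0.01, (0, 1): 0.10,
        (1, 0): 0.20, (1, 1): 0.50}

def joint(a, s, c):
    """Joint probability P(a, s, c) = P(a) P(s) P(c | a, s)."""
    pc = p_c1[(a, s)]
    return p_a[a] * p_s[s] * (pc if c == 1 else 1.0 - pc)

def posterior_smoking(c):
    """P(S | C = c): marginalize A out of the joint, then normalize."""
    unnormalized = [sum(joint(a, s, c) for a in (0, 1)) for s in (0, 1)]
    z = sum(unnormalized)
    return [u / z for u in unnormalized]

post = posterior_smoking(1)   # belief about Smoking after observing Cancer
```

On a graph without cycles such as this one, sum-product propagation yields the same posterior as the enumeration, so a small script of this kind can serve as a reference when validating a block diagram.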

Once the model has been designed, it can be run in two modalities: inference (a) and learning (b).

Link to GitHub Repository

- Buonanno A., Palmieri F.A.N., "Simulink Implementation of Belief Propagation in Normal Factor Graphs". In: Bassis S., Esposito A., Morabito F. (eds) Advances in Neural Networks: Computational and Theoretical Issues. Smart Innovation, Systems and Technologies, vol 37. Springer, Cham (WIRN 2014); DOI: https://doi.org/10.1007/978-3-319-18164-6_2; Publisher Name: Springer, Cham; Print ISBN: 978-3-319-18163-9; Online ISBN: 978-3-319-18164-6.